Ever pulled your chair up to a colleague’s desk to pair on some code or debug a problem? Multi's meant to be the virtual equivalent of just that.

We’ve built many collaborative features that mimic things you can do at the same desk, like pointing, drawing, or scribbling notes—and most of the time that’s all you need. But sometimes, you just need to grab the mouse and keyboard. That’s why we built remote control. Here’s how.

Requirements

In a nutshell, remote control should feel like you’re working directly on the computer you’re controlling. Our early version covers:

Handling mouse and keyboard events, including modifiers

Keeping latency as low as possible

Letting the local user regain control by “short-circuiting” the remote control

A handshake/security system between the presenter and the controller

Making it intuitive to understand who’s acting

Implementation

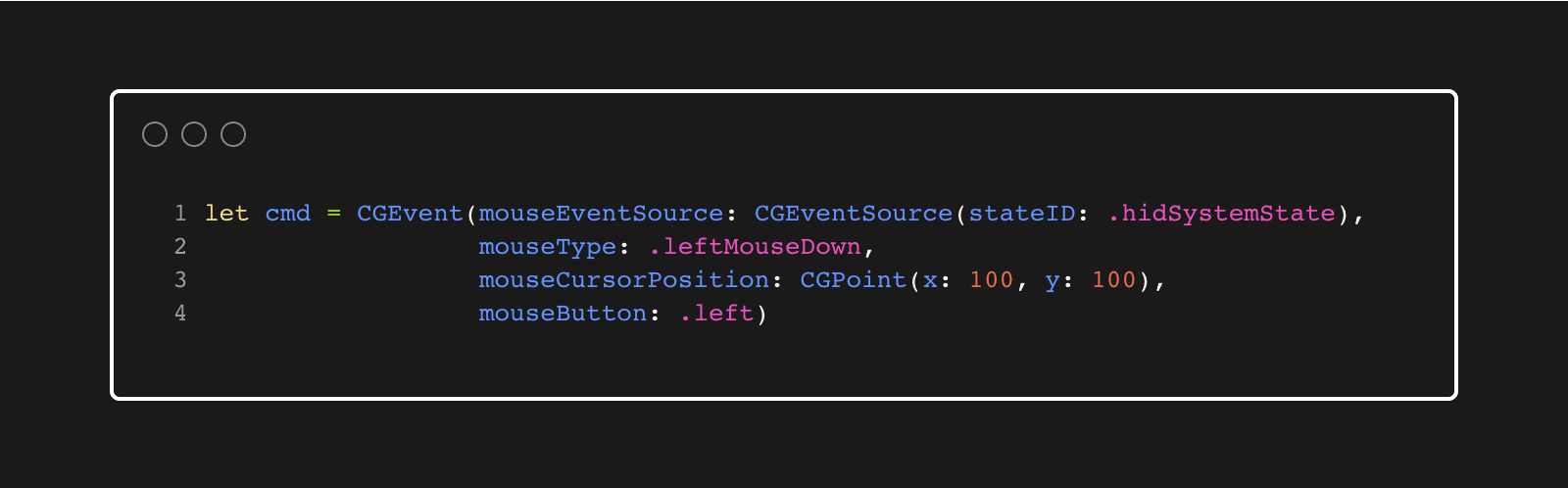

We need a way to pass the remote control input commands to macOS programmatically. Because we want remote control to feel as native as possible, we hook into the system at the lowest level available to us. That means creating and posting CGEvents. CGEvents are part of the Core Graphics framework and let us create both keyboard and mouse events. Here’s what creating a CGEvent that represents a mouse-down event looks like:

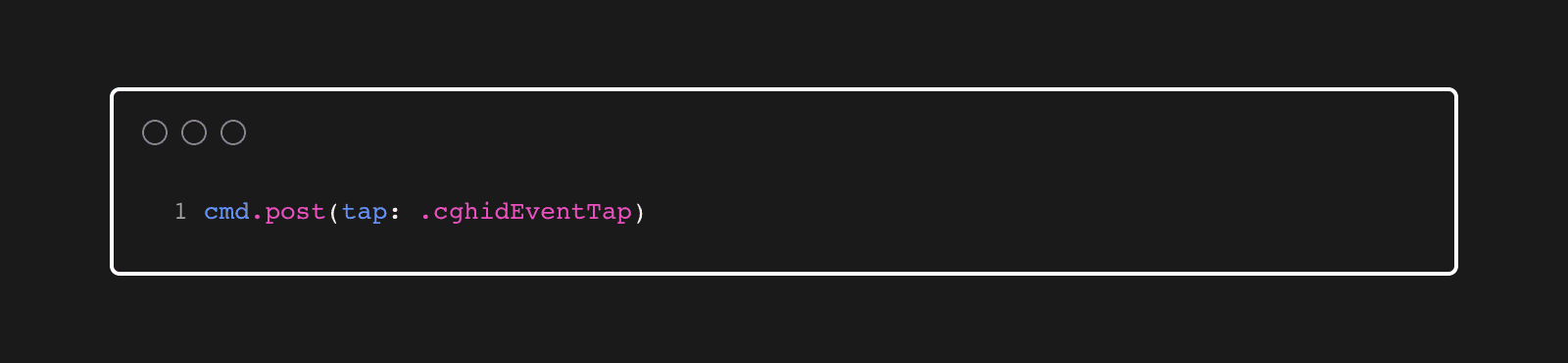
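As a text version of that step, here is a minimal sketch of creating a left mouse-down event. The coordinates and the choice of event source are placeholders for illustration, not values taken from Remotion's code.

```swift
import CoreGraphics

// An event source that mirrors the state of the hardware input system.
let source = CGEventSource(stateID: .hidSystemState)

// Global display coordinates: the origin is the top-left corner of the
// main display. These numbers are arbitrary placeholders.
let position = CGPoint(x: 200, y: 150)

// The initializer is failable, so `mouseDown` is an optional CGEvent.
let mouseDown = CGEvent(
    mouseEventSource: source,
    mouseType: .leftMouseDown,
    mouseCursorPosition: position,
    mouseButton: .left
)
```

Nothing has been sent to the system at this point; the event is just a value describing the input.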

Then, we can post that event to the system (note that the user must grant your app Accessibility permission for this to work):
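A hedged sketch of the posting step follows. The helper name `postLeftMouseDown(at:)` is hypothetical, and checking `AXIsProcessTrusted()` first is our assumption about a reasonable guard, not something the original code is known to do.

```swift
import CoreGraphics
import ApplicationServices

/// Creates a left mouse-down event at `position` and posts it to the system.
/// Returns false when the process is not trusted for Accessibility or the
/// event could not be created. (Hypothetical helper, for illustration only.)
func postLeftMouseDown(at position: CGPoint) -> Bool {
    // Bail out early if the user has not granted Accessibility permission.
    guard AXIsProcessTrusted() else { return false }

    guard let event = CGEvent(
        mouseEventSource: CGEventSource(stateID: .hidSystemState),
        mouseType: .leftMouseDown,
        mouseCursorPosition: position,
        mouseButton: .left
    ) else { return false }

    // Inject the event at the HID level, where hardware input enters the system.
    event.post(tap: .cghidEventTap)
    return true
}
```

A complete click would also need a matching `.leftMouseUp` event posted afterwards.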

For remote control, we use the CGEvent(mouseEventSource… initializer shown above for most mouse events and the CGEvent(keyboardEventSource… initializer for keyboard events.

💡 There’s also a CGWarpMouseCursorPosition API that can be used to move the mouse — this API bypasses CGEvent taps. We don’t use this API, since we rely on the short-circuit mechanism described later on.

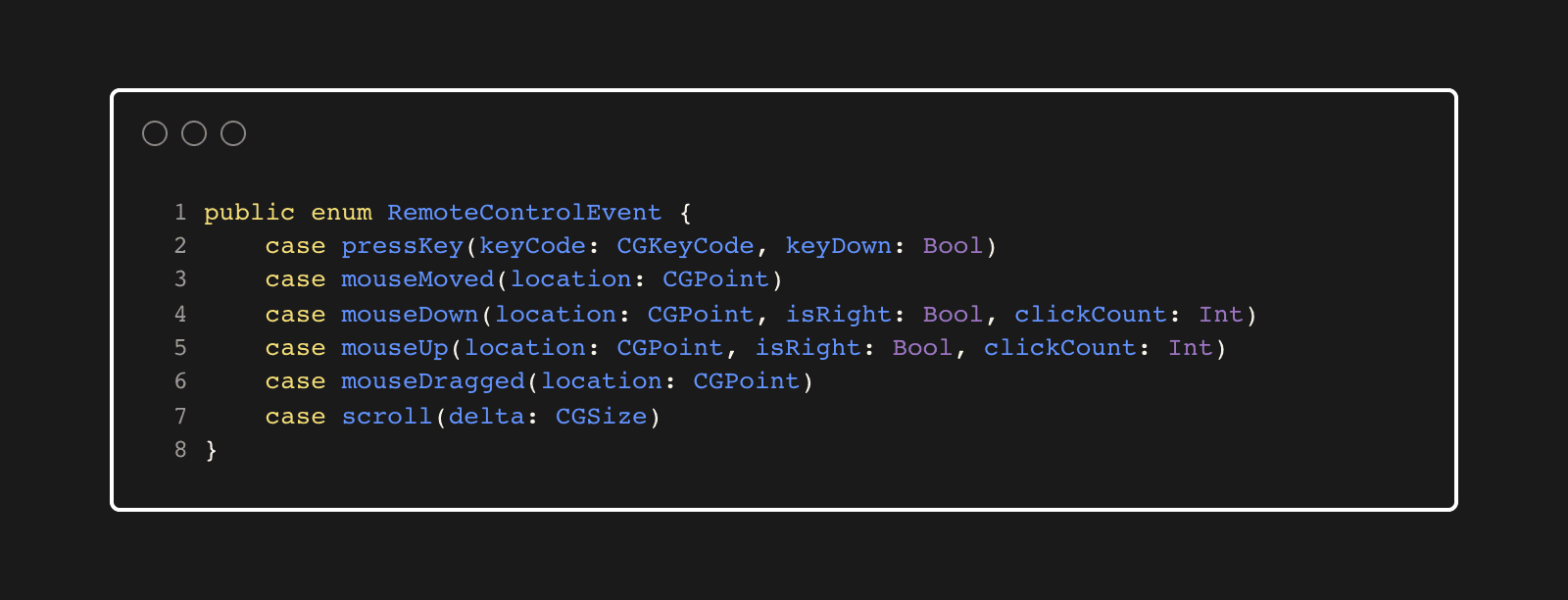

In Remotion, we pass through the following event types from the controller to the receiver:

Key up/down

Left/right mouse up/down (Note that double clicks are unique events and must be handled separately from single clicks)

Mouse dragged

Scroll

In our API, these possibilities are represented by an enum:

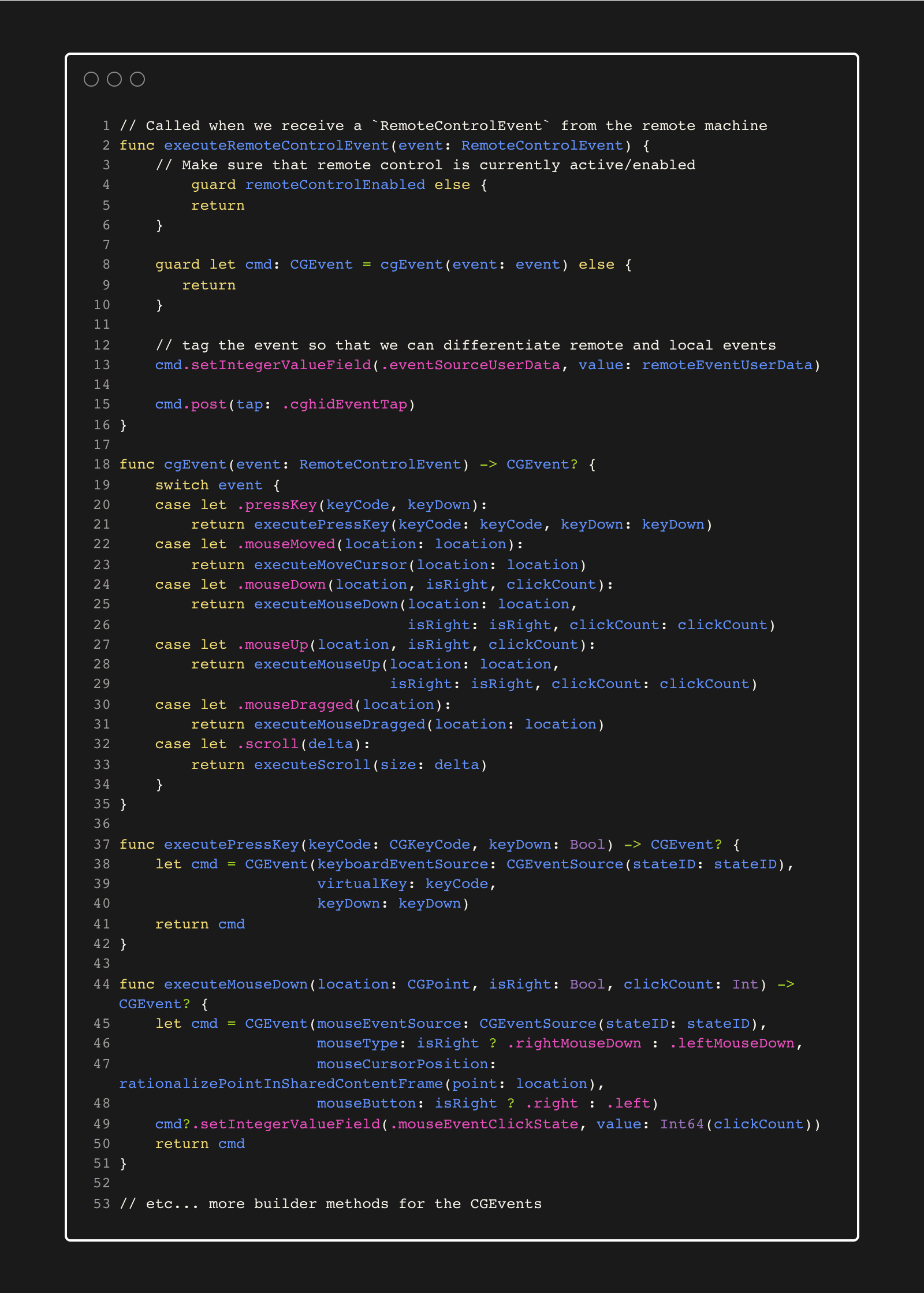
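One way such an enum could look is sketched below. The names `RemoteControlEvent` and `RemoteMouseButton`, the associated values, and the `cgEventType` helper are all assumptions made for illustration; the original enum may be shaped differently.

```swift
import CoreGraphics

// Hypothetical button type covering the two buttons listed above.
enum RemoteMouseButton { case left, right }

// Hypothetical event enum mirroring the list of forwarded event types.
enum RemoteControlEvent {
    case keyDown(keyCode: CGKeyCode)
    case keyUp(keyCode: CGKeyCode)
    // clickCount lets a double click be told apart from a single click.
    case mouseDown(button: RemoteMouseButton, at: CGPoint, clickCount: Int)
    case mouseUp(button: RemoteMouseButton, at: CGPoint, clickCount: Int)
    case mouseDragged(button: RemoteMouseButton, to: CGPoint)
    case scroll(deltaX: Int32, deltaY: Int32)

    /// The Core Graphics event type that corresponds to this case.
    var cgEventType: CGEventType {
        switch self {
        case .keyDown: return .keyDown
        case .keyUp: return .keyUp
        case .mouseDown(let button, _, _):
            return button == .left ? .leftMouseDown : .rightMouseDown
        case .mouseUp(let button, _, _):
            return button == .left ? .leftMouseUp : .rightMouseUp
        case .mouseDragged(let button, _):
            return button == .left ? .leftMouseDragged : .rightMouseDragged
        case .scroll: return .scrollWheel
        }
    }
}
```

Keeping the mapping to `CGEventType` in one place means the code that builds events does not have to repeat the left/right distinction.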

This ends up looking something like this when we put the pieces together:
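The sketch below shows how the pieces might fit together. It is a reconstruction under stated assumptions, not the original code: `RemoteInput`, `makeCGEvent(for:source:)`, `perform(_:)`, and the `remoteEventMarker` value are hypothetical names, and handling double clicks through the `.mouseEventClickState` field is one possible approach.

```swift
import CoreGraphics

// Hypothetical marker written into every event created for the remote user.
// Any nonzero value that fits in 64 bits would do; this one spells "REMO".
let remoteEventMarker: Int64 = 0x52454D4F

// Hypothetical, compact description of an incoming remote control command.
enum RemoteInput {
    case key(code: CGKeyCode, isDown: Bool)
    case mouse(type: CGEventType, button: CGMouseButton, at: CGPoint, clickCount: Int64)
    case scroll(deltaX: Int32, deltaY: Int32)
}

/// Builds the CGEvent for a remote command and tags it as remote.
func makeCGEvent(for input: RemoteInput, source: CGEventSource?) -> CGEvent? {
    let event: CGEvent?
    switch input {
    case let .key(code, isDown):
        event = CGEvent(keyboardEventSource: source, virtualKey: code, keyDown: isDown)
    case let .mouse(type, button, position, clickCount):
        event = CGEvent(mouseEventSource: source, mouseType: type,
                        mouseCursorPosition: position, mouseButton: button)
        // A click count of 2 marks the event as part of a double click.
        event?.setIntegerValueField(.mouseEventClickState, value: clickCount)
    case let .scroll(deltaX, deltaY):
        // wheel1 is the vertical axis, wheel2 the horizontal axis.
        event = CGEvent(scrollWheelEvent2Source: source, units: .pixel,
                        wheelCount: 2, wheel1: deltaY, wheel2: deltaX, wheel3: 0)
    }
    // Tag the event so a listener can tell it apart from local input.
    event?.setIntegerValueField(.eventSourceUserData, value: remoteEventMarker)
    return event
}

/// Creates the event for `input` and posts it to the system.
func perform(_ input: RemoteInput) {
    let source = CGEventSource(stateID: .hidSystemState)
    makeCGEvent(for: input, source: source)?.post(tap: .cghidEventTap)
}
```

Splitting event creation from posting keeps `makeCGEvent(for:source:)` free of side effects, which makes it easier to check in isolation.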

If you’re reading carefully, you may have noticed that none of this code handles modifier keys, which are needed for any real workflow. They’re tricky to get right and we’re still iterating on our approach. A topic for a future blog post!

Short circuiting

When a remote control session is active, it’s very important that the local user can regain control of their own machine. First and foremost, it’s a security issue: you need to be able to stop a bad actor from executing malicious actions. But even in a high-trust environment, the local user’s input should override the remote input. Otherwise, when both users try to control the machine at the same time, their events “fight” each other, causing things like cursor jitter as the system tries to pull the mouse to two different locations.

In order to create a short-circuit, we need to:

Listen for events from the system

Be able to differentiate truly local events (e.g., events that the local user initiated themselves using the mouse and keyboard) from events that we created programmatically on behalf of the remote user.

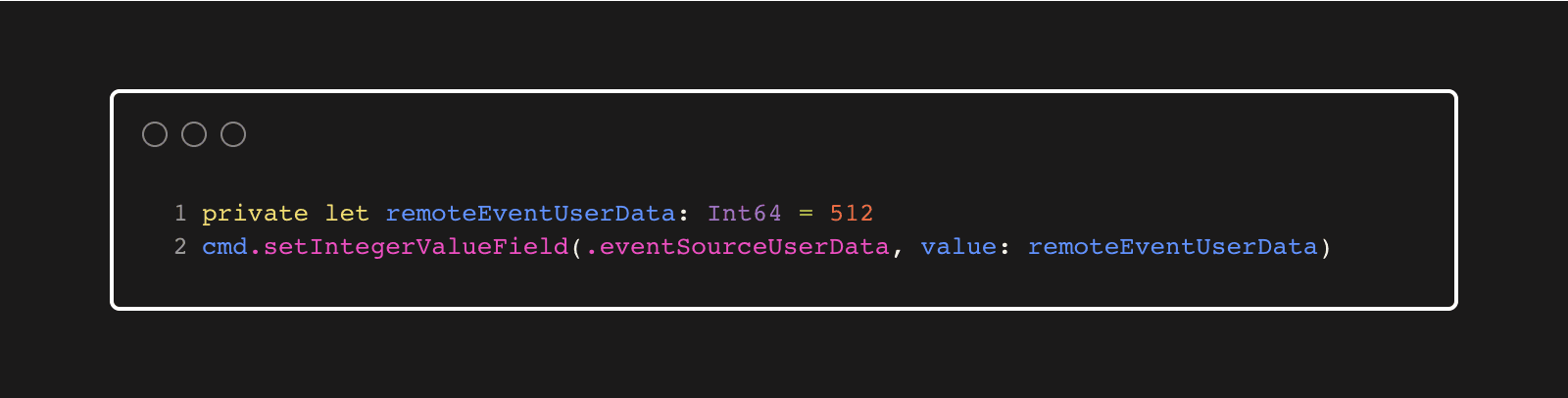

You may have noticed the following line from the code sample above:
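The line in question is the one that writes a marker into the event's user data field. In isolation it might look like the sketch below, where `remoteEventMarker` and its value are hypothetical.

```swift
import CoreGraphics

// Hypothetical marker value; anything nonzero that fits in 64 bits works.
let remoteEventMarker: Int64 = 0x52454D4F

// A blank event standing in for one created on behalf of the remote user.
let event = CGEvent(source: nil)

// Tag the event as remote before it is posted.
event?.setIntegerValueField(.eventSourceUserData, value: remoteEventMarker)
```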

This is the key to the second point above. CGEvent lets us set arbitrary data on the event using the .eventSourceUserData field, which the documentation describes as: “Key to access a field that contains the event source user-supplied data, up to 64 bits.”

None of the truly local events will have this field set, so we can guarantee that if the field is present, it’s a remote event, and when our listener catches it, we should not execute a short circuit.

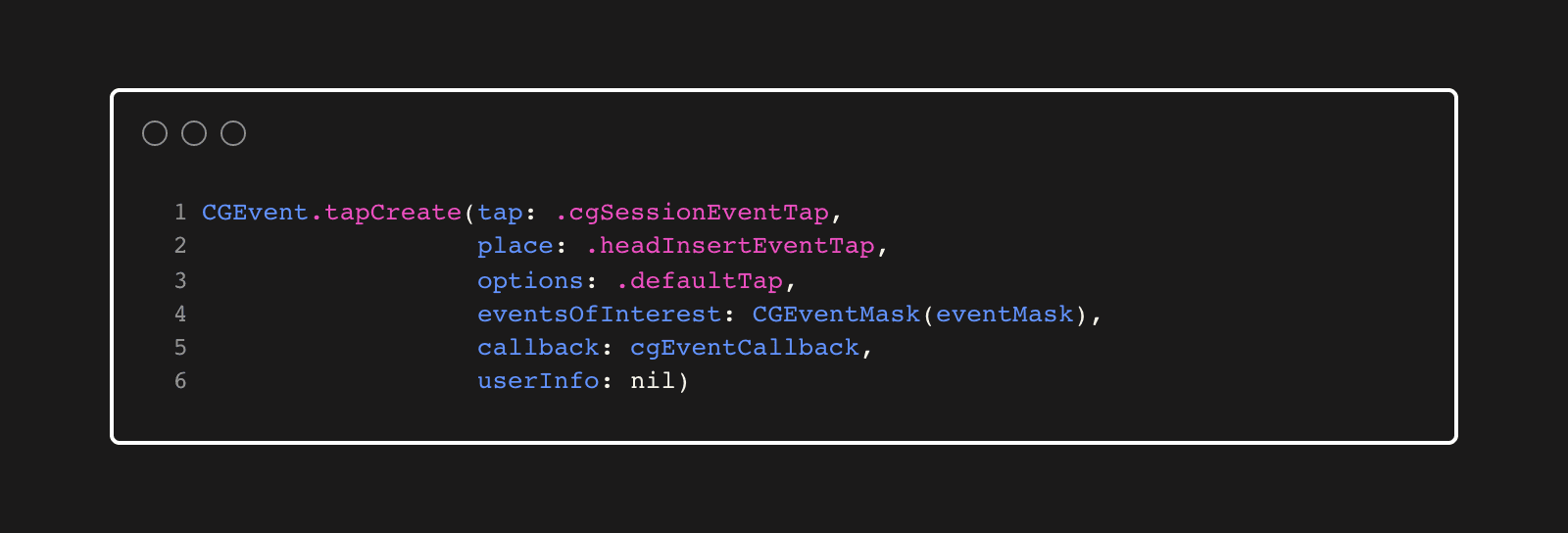

To listen for these events, we use a CGEvent tap:

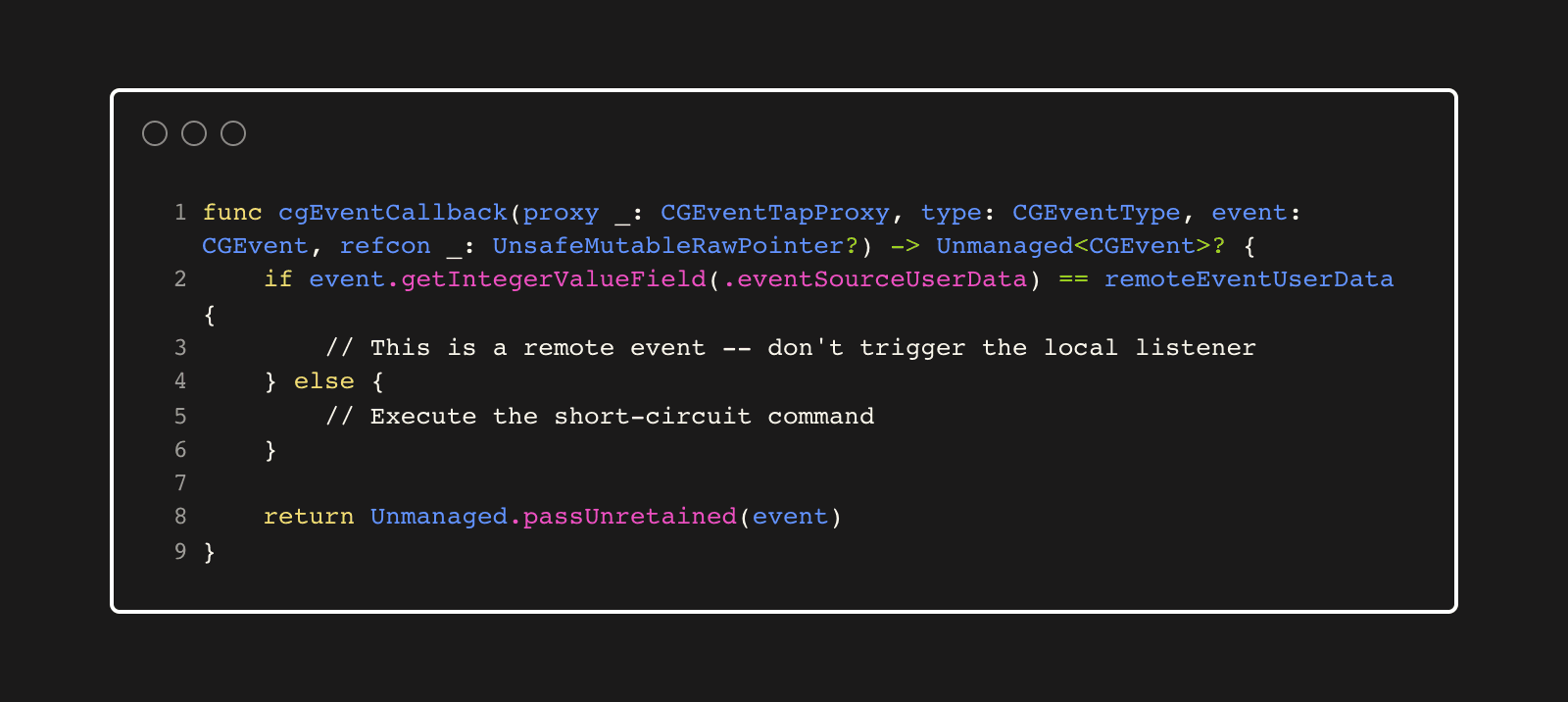
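A minimal sketch of setting up such a tap is shown below. The list of event types, the helper names `eventMask(for:)` and `startListening()`, and the choice of a listen-only session-level tap are assumptions for illustration; the callback here simply passes every event through.

```swift
import Foundation
import CoreGraphics

// Hypothetical list of input types a local user could generate.
let localInputTypes: [CGEventType] = [
    .mouseMoved, .leftMouseDown, .leftMouseUp, .rightMouseDown, .rightMouseUp,
    .leftMouseDragged, .rightMouseDragged, .scrollWheel, .keyDown, .keyUp,
]

/// Combines event types into the bit mask that an event tap expects.
func eventMask(for types: [CGEventType]) -> CGEventMask {
    types.reduce(0) { $0 | (CGEventMask(1) << CGEventMask($1.rawValue)) }
}

// The callback is a C function pointer, so it cannot capture local state.
let tapCallback: CGEventTapCallBack = { _, _, event, _ in
    // Listen-only: hand the event back unchanged.
    return Unmanaged.passUnretained(event)
}

/// Creates the tap and attaches it to the main run loop.
/// Returns nil if the tap could not be created, which typically means the
/// required permission has not been granted.
func startListening() -> CFMachPort? {
    guard let tap = CGEvent.tapCreate(
        tap: .cgSessionEventTap,
        place: .headInsertEventTap,
        options: .listenOnly,
        eventsOfInterest: eventMask(for: localInputTypes),
        callback: tapCallback,
        userInfo: nil
    ) else { return nil }

    let runLoopSource = CFMachPortCreateRunLoopSource(kCFAllocatorDefault, tap, 0)
    CFRunLoopAddSource(CFRunLoopGetMain(), runLoopSource, .commonModes)
    CGEvent.tapEnable(tap: tap, enable: true)
    return tap
}
```

Events posted at the HID level flow on to session-level taps, so a tap like this one sees both the local user's input and the events created for the remote user.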

Inside the callback, we check the user data field we set earlier:
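The check itself can be sketched as follows. `remoteEventMarker`, `isRemoteEvent(_:)`, and the `RemoteControlSession` type are hypothetical stand-ins; what a real short circuit does (ending the session, notifying the controller, and so on) is outside the scope of this example.

```swift
import CoreGraphics

// Hypothetical marker that remote events are tagged with when created.
let remoteEventMarker: Int64 = 0x52454D4F

// Hypothetical stand-in for whatever state tracks the active session.
enum RemoteControlSession {
    static var isActive = true
    static func shortCircuit() { isActive = false }
}

/// True when the event carries the marker we set on remote events.
func isRemoteEvent(_ event: CGEvent) -> Bool {
    event.getIntegerValueField(.eventSourceUserData) == remoteEventMarker
}

let shortCircuitCallback: CGEventTapCallBack = { _, type, event, _ in
    // The system can also deliver "tap disabled" notifications; skip those.
    if type == .tapDisabledByTimeout || type == .tapDisabledByUserInput {
        return Unmanaged.passUnretained(event)
    }
    // Untagged input came from the local user, so they take back control.
    if !isRemoteEvent(event) {
        RemoteControlSession.shortCircuit()
    }
    return Unmanaged.passUnretained(event)
}
```

Because the callback is a C function pointer, it can only reach global or static state, which is why the session is modeled with static members here.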

Conclusion

The code above gives us the first building blocks of our remote control engine in Remotion. On top of that basic engine, there are other integral layers, such as the “handshake” mechanism that connects the controller and receiver and the pipe that sends the remote control commands over the network.

John Nastos is a macOS engineer at Remotion. He lives in Portland, Oregon where he also has an avid performing career as a jazz musician. You can find him on Twitter at @jnpdx where he'll happily answer questions about this post and entertain suggestions for future articles.